AI-driven policing has arrived

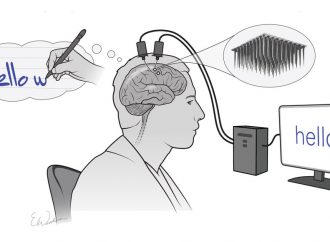

UK police in the city of Durham, England, are prepared to go live with a predictive artificial intelligence system that will determine whether a suspect should be kept in custody, according to the BBC. Called Hart, which stands for Harm Assessment Risk Tool, the system is designed to classify individuals based on a low, medium, or high risk of committing a future offense. Police plan to put it live in the next few months to test its effectiveness against cases in which custody specialists do not rely on the system’s judgement.

The AI assessment could be used for a number of different determinations, like whether a suspect should be kept in custody longer and whether bail should be set before or after a charge is issued. According to the BBC, Hart’s decision-making is based on Durham police data gathered between 2008 and 2013, and it accounts for factors like criminal history, severity of the current crime, and whether a suspect is a flight risk. In initial tests in 2013, in which suspects’ behavior was tracked for two years after an initial offense, Hart’s low-risk forecasts were accurate 98 percent of the time and its high-risk forecasts were accurate 88 percent of the time.

Hart is just one of many algorithmic and predictive software tools being used by law enforcement officials and court and prison systems around the globe. And although they may improve efficiency in police departments, the Orwellian undercurrents of an algorithmic criminal justice system have been backed up by troubling hard data.

In a thorough investigation from ProPublica published last year, these risk-assessment tools were found to be deeply flawed, with inherent human bias built in that made them twice as likely to flag black defendants as future criminals and far more likely to treat white defendants as low-risk, standalone offenders. Many algorithmic systems today, including those employed by Facebook, Google, and other tech companies, are similarly at risk of injecting bias into a system, as the judgement of human beings was used to craft the software in the first place.

Durham police were quick to reassure the BBC that Hart’s decisions will only be “advisory” during its preliminary and experimental use. Because the system will take into account factors like gender and postal code, law enforcement and academic advisors say it will remain incomplete and should never wholly inform decision-making.

“Simply residing in a given post code has no direct impact on the result, but must instead be combined with all of the other predictors in thousands of different ways before a final forecasted conclusion is reached,” wrote the authors of a report submitted following a parliamentary inquiry on algorithmic decision-making, according to the BBC. The authors, who included Professor Lawrence Sherman, director of the University of Cambridge’s Centre for Evidence-based Policing, also say Hart will have an auditing system built in to track how it arrived at its conclusions.

Source: The Verge
