Google’s DeepMind lab trained bots to play a virtual version of capture the flag, teaching them how to work as a unit

Source: Smithsonian.com

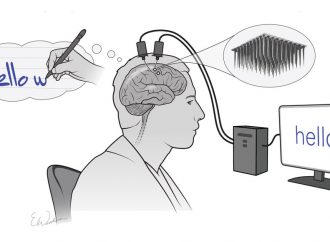

Computers have dominated humans in one-on-one games like chess for decades, but getting artificial intelligence (A.I.) to cooperate with teammates is a little trickier. Now, researchers at Google’s DeepMind project have taught A.I. players to work together on teams with both humans and other computers to compete in the 1999 video game Quake III Arena.

Edd Gent at Science reports that when A.I. faces only one opponent, it usually does well because it only has to anticipate the possible moves of a single mind. Teamwork is a different matter, because it requires skills that computers aren’t traditionally good at, like predicting how a group of teammates will behave. To be truly useful, A.I. has to learn how to cooperate with other intelligences.

Google’s DeepMind team explains in a blog post:

“Billions of people inhabit the planet, each with their own individual goals and actions, but still capable of coming together through teams, organizations and societies in impressive displays of collective intelligence. This is a setting we call multi-agent learning: many individual agents must act independently, yet learn to interact and cooperate with other agents. This is an immensely difficult problem – because with co-adapting agents the world is constantly changing.”

Multiplayer first-person video games, in which teams of players run around virtual worlds, usually shooting guns or grenade launchers at one another, are the perfect venue for A.I. to learn the intricacies of teamwork. Each player must act individually while making choices that benefit the team as a whole.

For the study, the team trained the A.I. to play capture the flag on the Quake III Arena platform. The rules are fairly simple: two teams face off on a maze-like battlefield. Each team tries to capture as many of the other team’s virtual flags as possible while protecting its own, and whichever team captures the most flags in five minutes wins. In practice, however, things can get complicated very quickly.

The DeepMind team created 30 neural networks and had them battle one another on a series of randomly generated game maps. The bots scored points by capturing flags and by zapping other players, which sent them back to a respawn area where their characters were rebooted. At first, the bots’ actions appeared random, but the more they played, the better they became. Networks that consistently lost were eliminated and replaced by modified versions of the winners. After 450,000 games, the team crowned one neural network, dubbed For the Win (FTW), as the champion.
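The eliminate-and-replace loop described above can be illustrated with a toy simulation. This is a minimal sketch under loud assumptions, not DeepMind’s code: each hypothetical `Agent` is reduced to a single `skill` number, a match is decided by comparing skill plus random noise, and after every round the weakest fifth of the population is swapped for slightly mutated copies of the strongest fifth. Only the population size of 30 comes from the article; the function names, match counts, noise levels and replacement fraction are all made up for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    # Hypothetical stand-in for one neural network: a single number for playing strength.
    name: str
    skill: float
    wins: int = 0


def play_match(a: Agent, b: Agent, rng: random.Random) -> Agent:
    """Toy match: the higher skill plus random noise wins. Returns the winner."""
    score_a = a.skill + rng.gauss(0, 1)
    score_b = b.skill + rng.gauss(0, 1)
    return a if score_a >= score_b else b


def make_population(size: int, rng: random.Random) -> list[Agent]:
    """Start with agents of random skill."""
    return [Agent(name=f"agent{i}", skill=rng.uniform(0, 1)) for i in range(size)]


def evolve(population: list[Agent], matches: int, replace_fraction: float,
           rng: random.Random) -> list[Agent]:
    """One round: random pairings, then swap the biggest losers for mutated winners."""
    for agent in population:
        agent.wins = 0
    for _ in range(matches):
        a, b = rng.sample(population, 2)
        play_match(a, b, rng).wins += 1
    ranked = sorted(population, key=lambda ag: ag.wins, reverse=True)
    n_replace = int(len(ranked) * replace_fraction)
    survivors = ranked[: len(ranked) - n_replace]
    # Each replacement is a slightly perturbed copy of one of the top finishers.
    children = [
        Agent(name=f"{parent.name}+", skill=parent.skill + rng.gauss(0, 0.1))
        for parent in ranked[:n_replace]
    ]
    return survivors + children


def train(population: list[Agent], generations: int, rng: random.Random) -> list[Agent]:
    """Repeat the play-then-replace round; the assumed settings are fixed here."""
    for _ in range(generations):
        population = evolve(population, matches=300, replace_fraction=0.2, rng=rng)
    return population


if __name__ == "__main__":
    rng = random.Random(0)
    pop = make_population(30, rng)
    final = train(pop, generations=50, rng=rng)
    best = max(final, key=lambda ag: ag.skill)
    print(best.name, round(best.skill, 2))
```

Because losers are always replaced by perturbed copies of winners, average skill tends to drift upward without anyone specifying what good play looks like. The toy leaves out the learning that happens inside each network between rounds; it only illustrates the selection step.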

The DeepMind group played the FTW algorithm against so-called mirror bots, which lack A.I. learning skills, and then against human teams. FTW crushed all challengers.

The group then held a tournament in which 40 human players were randomly matched up as both teammates and opponents of the bot. According to the blog post, human players found the bots more collaborative than their human teammates. Human players paired with FTW agents were able to beat the all-bot teams in only about 5 percent of matches.

As they learned, the bots discovered some strategies long embraced by human players, like hanging out near a flag’s respawn point to grab it when it reappears. FTW teams also found and exploited a bug: shooting their own teammates in the back gave them a speed boost.

“What was amazing during the development of this project was seeing the emergence of some of these high-level behaviors,” DeepMind researcher and lead author Max Jaderberg tells Gent. “These are things we can relate to as human players.”

One major reason the bots outperformed human players is that they were fast and accurate marksmen, quicker on the draw than their human opponents. But that wasn’t the only factor in their success. According to the blog, when researchers built a quarter-second reaction delay into the robo-shooters, the best humans could still beat them only about 21 percent of the time.

Since this initial study, FTW and its descendants have been unleashed on the full Quake III Arena battlefield and have shown that they can master an even more complex world with more options and nuance. DeepMind researchers have also created a bot that excels at the ultra-complex space strategy game StarCraft II.

But the research isn’t just about making better video game algorithms. Learning about teamwork could eventually help A.I. work in fleets of self-driving cars or perhaps someday become robotic assistants that help anticipate the needs of surgeons, Science’s Gent reports.

Not everyone, however, thinks the arcade-star bots represent true teamwork. A.I. researcher Mark Riedl of Georgia Tech tells The New York Times that the bots are so good at the game because each one understands the strategies in depth. But that isn’t necessarily cooperation, since the A.I. teams lack crucial elements of human teamwork: communication and intentional cooperation.

And, of course, they also lack the other hallmark of the cooperative video game experience: trash talking the other team.
