Study funded by Facebook aims to improve communication with paralysed patients

Source: The Guardian

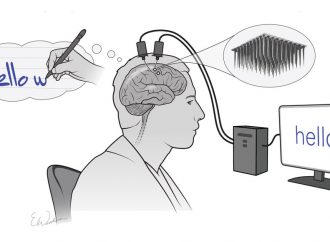

Doctors have turned the brain signals for speech into written sentences in a research project that aims to transform how patients with severe disabilities communicate in the future.

The breakthrough is the first to demonstrate how the intention to say specific words can be extracted from brain activity and converted into text rapidly enough to keep pace with natural conversation.

In its current form, the brain-reading software works only for certain sentences it has been trained on, but scientists believe it is a stepping stone towards a more powerful system that can decode in real time the words a person intends to say.

Doctors at the University of California in San Francisco (UCSF) took on the challenge in the hope of creating a product that allows paralysed people to communicate more fluidly than they can with existing devices, which pick up eye movements and muscle twitches to control a virtual keyboard.

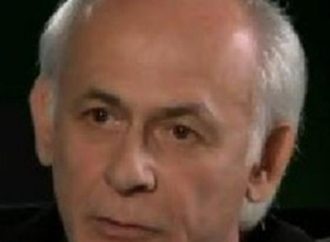

“To date there is no speech prosthetic system that allows users to have interactions on the rapid timescale of a human conversation,” said Edward Chang, a neurosurgeon and lead researcher on the study published in the journal Nature Communications.

The work, funded by Facebook, was possible thanks to three epilepsy patients who were about to have neurosurgery for their condition. Before their operations went ahead, all three had a small patch of tiny electrodes placed directly on the brain for at least a week to map the origins of their seizures.

During their stay in hospital, the patients, all of whom could speak normally, agreed to take part in Chang’s research. He used the electrodes to record brain activity while each patient listened to nine set questions and read aloud a list of 24 potential responses.

With the recordings in hand, Chang and his team built computer models that learned to match particular patterns of brain activity to the questions the patients heard and the answers they spoke. Once trained, the software could identify almost instantly, and from brain signals alone, what question a patient heard and what response they gave, with an accuracy of 76% and 61% respectively.

“This is the first time this approach has been used to identify spoken words and phrases,” said David Moses, a researcher on the team. “It’s important to keep in mind that we achieved this using a very limited vocabulary, but in future studies we hope to increase the flexibility as well as the accuracy of what we can translate.”

Though rudimentary, the system allowed patients to answer questions about the music they liked; how well they were feeling; whether their room was too hot or cold, or too bright or dark; and when they would like to be checked on again.

Despite the breakthrough, there are hurdles ahead. One challenge is to improve the software so it can translate brain signals into more varied speech on the fly. This will require algorithms trained on a huge amount of spoken language and corresponding brain signal data, which may vary from patient to patient.

Another goal is to read “imagined speech”, or sentences spoken in the mind. At the moment, the system detects brain signals that are sent to move the lips, tongue, jaw and larynx – in other words, the machinery of speech. But for some patients with injuries or neurodegenerative disease, these signals may not suffice, and more sophisticated ways of reading sentences in the brain will be needed.

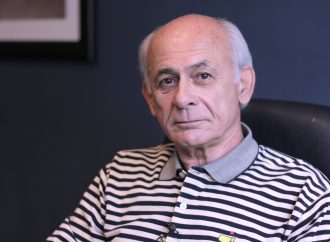

While the work is still in its infancy, Winston Chiong, a neuroethicist at UCSF who was not involved in the latest study, said it was important to debate the ethical issues such systems might raise in the future. For example, could a “speech neuroprosthesis” unintentionally reveal people’s most private thoughts?

Chang said that decoding what someone was openly trying to say was hard enough, and that extracting their inner thoughts was virtually impossible. “I have no interest in developing a technology to find out what people are thinking, even if it were possible,” he said.

“But if someone wants to communicate and can’t, I think we have a responsibility as scientists and clinicians to restore that most fundamental human ability.”
